Why is almost no one in AI talking about Cloudflare?

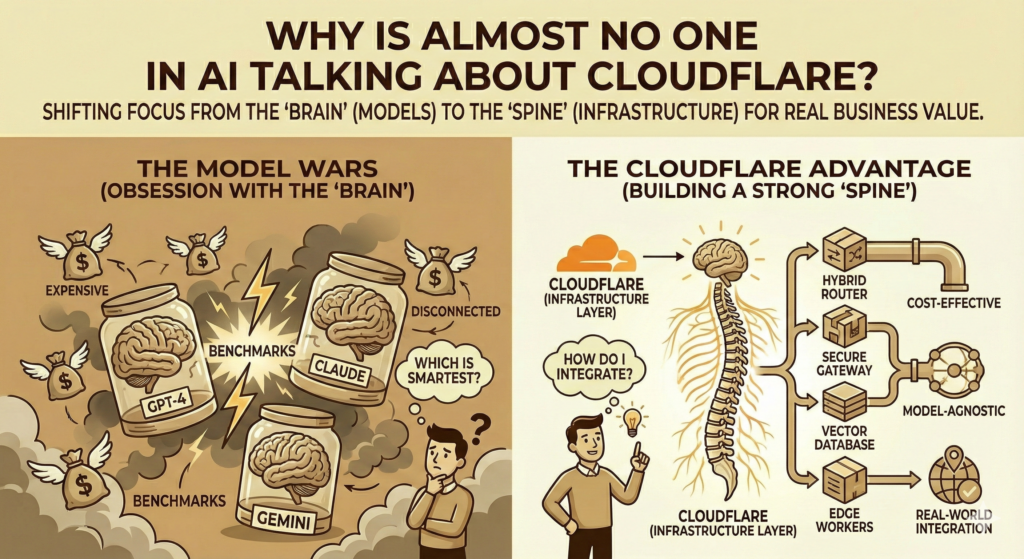

While everyone fights over which "brain" is smartest (GPT-4 vs Claude vs Gemini), are we ignoring the "spine" that makes AI actually work for business processes?

The rub: If you scroll through your feed right now, it is a battlefield of benchmarks. “Claude 3.5 writes better code.” “Gemini 1.5 reads more books.” “GPT-4o is faster.”

But if you are building for a Mid-Market company (one without a blank check to hand you, but with a significant number of real users or staff who need world-class service), are we asking the wrong questions when making platform decisions? Perhaps we shouldn’t just be asking “Which model is smartest?” We should also be asking “How do I affordably integrate this intelligence into my messy, real-world business?”

The answer may (or may not) be Cloudflare, but strangely, I hear almost nothing about them.

While the giants fight for dominance of the “Model Layer,” Cloudflare seems to be quietly focused on taking a leadership position in the “Infrastructure Layer”, perhaps flying under the radar… For the Mid-Market, perhaps they are not just another vendor; perhaps they are the central nervous system for AI we never knew we needed?

The "Brain" vs. The "Nervous System"

I think of the major LLMs (OpenAI, Google, Microsoft Copilot) as massive, expensive supercomputers: giant brains floating in a jar. They are brilliant, but they are disconnected. Frustratingly so.

To use them, you need to send them data. You need to secure that data. You need to format it. You need to remember what they said.

So the question is: has Cloudflare built the nervous system? Through Workers AI (running open models on Cloudflare’s network), Vectorize (its vector database) and AI Gateway (a proxy in front of third-party model APIs), they provide the connectivity that lets you route information to these brains only when you need to, while potentially handling the bulk of the workload (perhaps 80%) at the edge for a fraction of what the other providers charge. All within a highly secure environment.

The "Hybrid" Architecture

Here’s why I think a solution like Cloudflare’s might be the “Unfair Advantage” SMBs and the Mid-Market need to compete with the upper end of town: the Hybrid Router.

In a pure “Buy” model, you send every user interaction to GPT-4. That’s $0.03 here, $0.10 there. At scale, the costs mount… fast.
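To put rough numbers on “the costs mount”, here is a back-of-the-envelope sketch in TypeScript. It assumes every request pays the $0.001 triage figure used for the Doorman below, that only complex requests are forwarded to a premium model at $0.03 each, and that 80% of traffic is simple. All three figures are illustrative, not quoted vendor pricing, and the function names are hypothetical.

```typescript
// Illustrative per-request prices in USD (assumptions, not vendor pricing).
const PREMIUM_COST = 0.03; // one call to a frontier model
const TRIAGE_COST = 0.001; // one call to a small open model at the edge

// Pure "Buy": every request goes straight to the premium model.
function pureBuyCost(requests: number): number {
  return requests * PREMIUM_COST;
}

// Hybrid: every request is triaged, and only the complex share is forwarded.
// Simple requests are assumed to be answered by the same small-model call,
// so they add no further cost.
function hybridCost(requests: number, simpleShare: number): number {
  const complexRequests = requests * (1 - simpleShare);
  return requests * TRIAGE_COST + complexRequests * PREMIUM_COST;
}

const monthlyRequests = 100_000;
console.log(`Pure buy: $${pureBuyCost(monthlyRequests).toFixed(2)}`);
console.log(`Hybrid:   $${hybridCost(monthlyRequests, 0.8).toFixed(2)}`);
```

Under these assumptions, 100,000 requests a month cost about $3,000 on the pure “Buy” model versus about $700 with triage. The triage step only pays for itself while the simple share exceeds `TRIAGE_COST / PREMIUM_COST` (about 3% with these figures); below that it is pure overhead.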

With Cloudflare as your spine, it seems you can build a “triage” system:

- The Doorman (Cloudflare Workers AI): A request comes in. A cheap, open-source model (like Llama 3 hosted on Cloudflare) analyses it for $0.001. “Is this complex?”

- The Route:
  - Simple request (e.g., “Reset my password”): the Cloudflare Worker handles it instantly. Cost: near zero.
  - Complex request (e.g., “Analyse this legal contract”): Cloudflare routes it to Claude 3.5 Sonnet via API. Cost: premium, but necessary, and you pay only for that specific request.

- The Memory (Vectorize): Cloudflare stores the context in its own vector database, so you aren’t paying OpenAI to “remember” your own customer data.
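The Doorman-and-Route flow above can be sketched in TypeScript as follows. This is a minimal sketch under stated assumptions: the `Model` interface, the SIMPLE/COMPLEX prompt and the fake models are all hypothetical. Inside a real Worker the small model might be called through the Workers AI binding (for example `env.AI.run("@cf/meta/llama-3-8b-instruct", { messages })`) and the premium model through an HTTP call, but fakes are injected here so the routing logic runs anywhere.

```typescript
// Hypothetical interface standing in for any model client.
interface Model {
  readonly name: string;
  complete(prompt: string): Promise<string>;
}

type Route = "edge" | "premium";

// The "Doorman": ask the small model for a one-word verdict and parse it.
// Anything other than SIMPLE is escalated to the premium model.
async function triage(doorman: Model, request: string): Promise<Route> {
  const verdict = await doorman.complete(
    `Reply with exactly SIMPLE or COMPLEX.\nRequest: ${request}`,
  );
  return verdict.trim().toUpperCase() === "SIMPLE" ? "edge" : "premium";
}

// The "Route": simple requests stay on the small model, complex ones go out.
async function handle(
  request: string,
  small: Model,
  premium: Model,
): Promise<{ route: Route; answer: string }> {
  const route = await triage(small, request);
  const model = route === "edge" ? small : premium;
  return { route, answer: await model.complete(request) };
}

// Fake models for illustration only: a keyword check plays the small model's verdict.
const fakeSmall: Model = {
  name: "small-open-model",
  async complete(prompt) {
    if (prompt.startsWith("Reply with exactly")) {
      return /contract|legal|analyse/i.test(prompt) ? "COMPLEX" : "SIMPLE";
    }
    return `[small] ${prompt}`;
  },
};

const fakePremium: Model = {
  name: "premium-model",
  async complete(prompt) {
    return `[premium] ${prompt}`;
  },
};

handle("Reset my password", fakeSmall, fakePremium).then((r) => console.log(r.route));
handle("Analyse this legal contract", fakeSmall, fakePremium).then((r) => console.log(r.route));
```

Escalating any unexpected verdict is a deliberate choice: a misparsed answer costs one premium call rather than handing a weak answer to a hard question. Vectorize-backed memory is left out to keep the sketch short.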

A "Quick Win" Factory?

For a Mid-Market business engaging an agency to deliver transformation, capital efficiency is critical to minimising the total cost of ownership and cash outflows. Designing the transformation in dedicated, independent stages allows the improvements generated by Stage 1 to pay for, or at least offset, the costs of Stage 2 and so on.

In that regard, what I find interesting about Cloudflare’s ecosystem is that it allows for “lego-block” development that delivers immediate value, amplifying the cost-offset effect achieved through intelligent project staging and timing (and thereby minimising the cash-flow impact on the business).
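Stage-by-stage self-funding is easiest to see as a running cash balance. The TypeScript sketch below compares the peak funding a client needs under a “big bang” plan and under a staged plan. `peakOutflow` is an illustrative helper, and all of the figures are invented for the example rather than drawn from any real project.

```typescript
// Monthly net cash flow for the client in USD (negative = money going out).
// Every figure is hypothetical: three stages costing $40,000 each, with each
// delivered stage assumed to save $5,000 a month from the following month.
type CashFlow = number[];

// Peak funding requirement: how far below zero the running balance ever dips.
function peakOutflow(flows: CashFlow): number {
  let balance = 0;
  let lowest = 0;
  for (const flow of flows) {
    balance += flow;
    lowest = Math.min(lowest, balance);
  }
  return -lowest;
}

// Big bang: all three stages paid for up front, savings arrive together afterwards.
const bigBang: CashFlow = [-60_000, -60_000, 15_000, 15_000, 15_000, 15_000, 15_000, 15_000, 15_000];

// Staged: each stage's savings help fund the next one.
const staged: CashFlow = [-40_000, 5_000, -35_000, 10_000, -30_000, 15_000, 15_000, 15_000, 15_000];

console.log(`Big bang peak funding: $${peakOutflow(bigBang)}`);
console.log(`Staged peak funding:   $${peakOutflow(staged)}`);
```

With these made-up numbers both plans spend the same $120,000, but the staged plan never needs more than $90,000 of funding at once, versus $120,000 for the big bang. The trade-off is that full savings arrive later (month 6 rather than month 3), so the right answer depends on the client’s real numbers.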

Some of the things you might like to target as quick wins are:

- The “Smart” Inbox: Don’t pay for a SaaS tool. Use Email Workers to catch invoices, read them with a small model, and push them to your ERP.

- Compliance Walls: Use AI Gateway to automatically redact PII (credit cards, phone numbers, addresses) before the data ever leaves your perimeter to hit OpenAI.

- RAG for Peanuts: Build a secure internal documentation search on Vectorize. It effectively scales to zero when no one is using it (you pay mainly for the vectors you store and query), unlike a dedicated AWS vector instance that bills you while you sleep.
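As a rough illustration of the “Compliance Walls” idea, here is a do-it-yourself sketch of the kind of redaction step a Worker could run before forwarding a prompt to an external model. It is not AI Gateway’s own feature or configuration. The patterns and the `redact` helper are hypothetical and deliberately simple: they will miss many formats and can over-match (an eight-digit date looks like a phone number), and postal addresses are left out because regular expressions handle them poorly.

```typescript
// Hypothetical, deliberately simple patterns; real PII detection needs far more.
// Order matters: card numbers are replaced before the looser phone pattern runs.
const PII_PATTERNS: Array<[string, RegExp]> = [
  // 13 to 16 digits, optionally separated by single spaces or hyphens.
  ["CARD", /\b(?:\d[ -]?){12,15}\d\b/g],
  // Email addresses.
  ["EMAIL", /\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b/g],
  // Phone-like runs: optional leading +, then 8 to 14 digits with common separators.
  ["PHONE", /\+?\d(?:[ ()-]?\d){7,13}/g],
];

// Replace each match with a placeholder such as [EMAIL] before the text leaves the Worker.
function redact(text: string): string {
  let result = text;
  for (const [label, pattern] of PII_PATTERNS) {
    result = result.replace(pattern, `[${label}]`);
  }
  return result;
}

console.log(redact("Card 4111 1111 1111 1111, email jane@example.com, call +61 2 9999 0000."));
// → "Card [CARD], email [EMAIL], call [PHONE]."
```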

The Verdict

The “Model Wars” are exciting, but are they a distraction? Aren’t models likely to become commodities? Will intelligence become cheap and interchangeable? Or will there be one model to rule them all once AGI becomes a reality?

If that comes to pass, is the real “moat” for your business your architecture: how you build intelligent, interoperable, scalable and secure solutions around those models that make a difference to your employees and customers?

If you build your application directly on top of a single model provider, you risk lock-in. If you build on a Connectivity Cloud like Cloudflare, you become model-agnostic: you could swap GPT-5 for Claude 4 next year with as little as a one-line code change.
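A “one-line” swap is only realistic if something absorbs the differences between providers’ APIs. The TypeScript sketch below shows one way to do that with small adapters for the OpenAI Chat Completions and Anthropic Messages formats. It assumes AI Gateway’s per-provider URL pattern (`https://gateway.ai.cloudflare.com/v1/ACCOUNT_ID/GATEWAY_ID/<provider>/...`), which is worth checking against Cloudflare’s current docs. `ACCOUNT_ID`, `GATEWAY_ID`, the model names and the helper functions are placeholders.

```typescript
// Provider-neutral description of an HTTP call; adapters fill in the details.
interface ChatRequest {
  url: string;
  headers: Record<string, string>;
  body: unknown;
}

interface Adapter {
  build(model: string, prompt: string, apiKey: string): ChatRequest;
  parse(json: unknown): string;
}

// Assumed AI Gateway base URL; ACCOUNT_ID and GATEWAY_ID are placeholders.
const GATEWAY = "https://gateway.ai.cloudflare.com/v1/ACCOUNT_ID/GATEWAY_ID";

const adapters: Record<string, Adapter> = {
  openai: {
    build: (model, prompt, apiKey) => ({
      url: `${GATEWAY}/openai/chat/completions`,
      headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
      body: { model, messages: [{ role: "user", content: prompt }] },
    }),
    // Chat Completions put the reply text in choices[0].message.content.
    parse: (json) =>
      (json as { choices: { message: { content: string } }[] }).choices[0].message.content,
  },
  anthropic: {
    build: (model, prompt, apiKey) => ({
      url: `${GATEWAY}/anthropic/v1/messages`,
      headers: {
        "Content-Type": "application/json",
        "x-api-key": apiKey,
        "anthropic-version": "2023-06-01",
      },
      // The Messages API requires max_tokens; 1024 is an arbitrary choice here.
      body: { model, max_tokens: 1024, messages: [{ role: "user", content: prompt }] },
    }),
    // The Messages API returns a list of content blocks; take the first block's text.
    parse: (json) => (json as { content: { text: string }[] }).content[0].text,
  },
};

// The "one line" you change to swap brains. The model name is a placeholder.
const ACTIVE = { provider: "anthropic", model: "your-claude-model" };

function buildRequest(prompt: string, apiKey: string): ChatRequest {
  const adapter = adapters[ACTIVE.provider];
  if (!adapter) throw new Error(`No adapter for provider "${ACTIVE.provider}"`);
  return adapter.build(ACTIVE.model, prompt, apiKey);
}

function parseReply(json: unknown): string {
  return adapters[ACTIVE.provider].parse(json);
}
```

In a Worker you would send the result with `fetch(req.url, { method: "POST", headers: req.headers, body: JSON.stringify(req.body) })` and pass the parsed JSON to `parseReply`. The rest of the application only ever sees plain strings, so changing `ACTIVE` is the whole swap.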

In short, should we stop looking for a smarter brain? Should we consider building a stronger spine? I mean, there’s no point in having the world’s biggest and smartest head if your body can’t hold it up, right!?